Urgent message: Many pediatric urgent care centers lack 24/7 pediatric radiologist coverage and rely on the urgent care provider for initial interpretation of radiographs and subsequent clinical management. Misinterpretation in this setting could represent a potential patient safety concern.

Allison Wood, DO; Anne McEvoy, MD; Paul Mullan, MD, MPH; Lauren Paluch, MPA, PA-C; Brynn Sheehan, PhD; Jiangtao Luo, PhD; Turaj Vazifedan, DHSc; Theresa Guins, MD; Jeffrey Bobrowitz, MD; and Joel Clingenpeel, MD

Citation: Wood A, McEvoy A, Mullan P, Paluch L, Sheehan B, Luo J, Vazifedan T, Guins T, Bobrowitz J, Clingenpeel J. Discrepancy rates in radiograph interpretations between pediatric urgent care providers and radiologists. J Urgent Care Med. 2021;15(11):19-22.

INTRODUCTION

There has been an increase in the number of patients utilizing urgent care facilities as families seek to lower healthcare costs, increase convenience, and avoid long wait times and overcrowding typically seen in the emergency department.1 The number of pediatric urgent care centers has been increasing in many metropolitan areas, offering a new method of delivering medical care to parents with acute care needs for their children.2

Although there are limitations to the care urgent care centers can provide from an acuity perspective compared with the ED, most have the capability of performing plain radiographs to evaluate common pediatric conditions, including pneumonia and fractures. Often, pediatric EDs or pediatric urgent care centers do not have pediatric radiologist coverage during all operating hours and therefore must rely on the expertise of the ordering provider for initial interpretation of radiographs.3 The variety of providers with differing roles and levels of expertise in a pediatric urgent care center (eg, advanced practice providers [APPs], board-certified pediatricians, and pediatric emergency medicine physicians) could contribute to variations in the accuracy of radiograph readings.

There have been numerous studies evaluating discrepancy rates in the reading of plain radiographs between emergency physicians and radiologists in both adult and pediatric ED settings. In ED studies involving pediatric patients, the discrepancy rate has ranged from 1% to 28%.4-11 Clinically significant discrepancy (CSD) rates, where a CSD is defined as a radiographic discrepancy requiring a subsequent change in medical management, have ranged from 0.41% to 6.3%.4-11 In several studies, chest radiographs were the most commonly misinterpreted study.4,6-10 Pediatric orthopedic radiographs were also frequently misinterpreted by non-radiologists, between 8% and 21% of the time, due to the presence of growth plates.4-11 One study found that the discrepancy rate was higher among less experienced physicians.6

The main aim of the current study was to describe the overall discrepancy rate and the CSD rate in pediatric chest and orthopedic radiographs between pediatric urgent care providers and pediatric radiologists. We also sought to compare discrepancy rates between physicians and APPs. To the best of our knowledge, this is the first study to evaluate discrepancy rates of pediatric radiographs in an urgent care setting.

METHODS

This observational, retrospective study reviewed plain radiographs (chest, clavicle, upper extremity, and lower extremity) ordered between 17:00 and 23:00 from January 2016 to December 2018. Data were collected from four pediatric urgent care centers within one children's health network. The pediatric urgent care centers treat patients from newborns to 21 years of age. The centers are located approximately 10 to 25 miles from a tertiary academic, freestanding children's hospital in a metropolitan area in the United States. Other imaging modalities (eg, computed tomography, magnetic resonance imaging, ultrasound), as well as pelvic, abdominal, and spinal x-rays, were excluded from analysis. Patients were also excluded if they were transferred to the ED because of their clinical condition.

During the hours of 17:00 to 23:00 of the study period, pediatric urgent care providers (ie, APPs, board-certified pediatricians, or board-certified pediatric emergency medicine physicians) were responsible for providing the preliminary reading on plain radiographs and determining the initial plan of care and follow-up. On the following morning, a board-certified pediatric radiologist reviewed all films obtained during this timeframe and placed a final read within the chart. If there was a discrepancy in readings, the radiograph study was placed in an electronic discrepancy folder within the urgent care’s shared computer system. Each day, the urgent care provider in charge at each center reviewed this folder for any discrepant radiographs from the prior night. The urgent care provider then notified the family of the discrepancy and discussed whether any changes in management were required. The pediatric radiologist’s interpretation was used as the gold standard for interpretation purposes. The urgent care provider would then document the discussion with the family in the electronic health record, including any changes in management or follow-up recommendations.

Three research team members divided among themselves all charts in which a radiology discrepancy was identified during the study period, and every chart was reviewed. If the chart indicated that the urgent care provider had noted the correct diagnosis or documented the correct finding in the medical decision-making section, the case was excluded from analysis because it was not a true discrepancy. Each true discrepancy was classified as a false positive (ie, the abnormality was noted by the urgent care provider but not by the pediatric radiologist) or a false negative (ie, the abnormality was noted by the pediatric radiologist but not by the urgent care provider). Charts were then reviewed to determine whether a change in clinical management was required, including any changes in follow-up, changes in therapy, or returns for evaluation. If the family could not be reached, or if there was no clear statement of how clinical management changed, the outcome was designated as not documented. If chart review revealed that a patient with a discrepant radiograph had died (all charts were reviewed at least 1 year after the index urgent care visit), the death was recorded as potentially related to the care received in the urgent care center if it occurred within 1 year of the index visit.
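The chart-review rules above amount to a small decision procedure. The sketch below encodes them under stated assumptions: the `FlaggedChart` record and its field names are hypothetical (the study's abstraction form is not published), and because the source defines only false positives and false negatives, any other pattern (eg, both reads abnormal but describing different findings) is rejected rather than guessed at.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record for one flagged chart; field names are illustrative only.
@dataclass
class FlaggedChart:
    provider_found_abnormality: bool      # urgent care provider's preliminary read
    radiologist_found_abnormality: bool   # pediatric radiologist's final read (gold standard)
    correct_finding_in_mdm: bool          # correct finding documented in medical decision-making
    management_changed: Optional[bool]    # None = family not reached or no clear statement


def classify_discrepancy(chart: FlaggedChart) -> str:
    """Return 'not true discrepancy', 'false positive', or 'false negative'."""
    if chart.correct_finding_in_mdm:
        # Provider already documented the right finding, so the chart is excluded.
        return "not true discrepancy"
    if chart.provider_found_abnormality and not chart.radiologist_found_abnormality:
        return "false positive"
    if chart.radiologist_found_abnormality and not chart.provider_found_abnormality:
        return "false negative"
    # Assumption: only FP/FN are defined in the source, so other patterns are rejected.
    raise ValueError("pattern not covered by the false positive/false negative definitions")


def management_outcome(chart: FlaggedChart) -> str:
    """Summarize the change-in-management field for a true discrepancy."""
    if chart.management_changed is None:
        return "not documented"
    return "change required" if chart.management_changed else "no change required"


# Usage: a finding the radiologist saw but the provider missed, with a documented change
missed = FlaggedChart(False, True, False, True)
print(classify_discrepancy(missed), "/", management_outcome(missed))
```

Keeping the exclusion check first mirrors the order described in the text: a chart is only labeled false positive or false negative once it has been confirmed as a true discrepancy.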

Data are presented as frequencies and percentages. The chi-square test or Fisher's exact test was used to compare discrepancy rates between APPs and physicians. A random 5% sample of the true discrepancies was selected to assess inter-rater reliability among the research team members performing chart review, and Fleiss' kappa was calculated. All statistical tests were performed using R version 3.6.3 (R Foundation for Statistical Computing, Vienna, Austria). All tests were two-sided, and p<0.05 was considered statistically significant.
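The physician-versus-APP comparison is a 2×2 table (provider group by discrepant/not discrepant). The standard-library sketch below shows both tests; it is not the authors' R code. Two assumptions are worth flagging: this chi-square omits Yates' continuity correction, whereas R's `chisq.test()` applies it to 2×2 tables by default, so results may differ slightly; and the "expected count below 5" switch to Fisher's exact test is a common rule of thumb, since the paper does not state its criterion. The example counts are toy values, not study data.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square without continuity correction for the table [[a, b], [c, d]].

    Rows are provider groups (eg, physicians, APPs); columns are discrepant and
    non-discrepant films. Returns (statistic, two-sided p-value), 1 degree of freedom.
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df the statistic is a squared standard normal: P(X > x) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value: sum the probabilities of all tables with the
    same margins that are no more likely than the observed table."""
    row1, row2, col1 = a + b, c + d, a + c
    total = math.comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x discrepant films in the first row
        return math.comb(row1, x) * math.comb(row2, col1 - x) / total

    observed = prob(a)
    p_value = 0.0
    for x in range(max(0, col1 - row2), min(row1, col1) + 1):
        p = prob(x)
        if p <= observed * (1 + 1e-7):  # small relative tolerance for ties
            p_value += p
    return min(1.0, p_value)


def compare_discrepancy_rates(a: int, b: int, c: int, d: int) -> tuple[str, float]:
    """Assumed rule of thumb: use Fisher's exact test if any expected count is below 5."""
    n = a + b + c + d
    expected = [
        (a + b) * (a + c) / n, (a + b) * (b + d) / n,
        (c + d) * (a + c) / n, (c + d) * (b + d) / n,
    ]
    if min(expected) < 5:
        return "fisher", fisher_exact_2x2(a, b, c, d)
    return "chi-square", chi_square_2x2(a, b, c, d)[1]


# Toy tables only; the study's group-level counts appear in its Table 3.
print(compare_discrepancy_rates(10, 20, 30, 40))
print(compare_discrepancy_rates(3, 1, 1, 3))
```

With samples as large as this study's, the expected counts are far above 5, so the chi-square branch would normally apply; Fisher's exact test matters mainly for sparse subgroups.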

This study was approved by the Institutional Review Board (IRB) at Eastern Virginia Medical School.

RESULTS

A total of 17,282 radiographs were performed between 17:00 and 23:00 during the study period at the four pediatric urgent care centers. Twenty-two films were excluded from analysis because these patients were transferred directly from urgent care to the ED. Of the remaining 17,260 films, 4,712 were chest, 203 clavicle, 6,270 lower extremity, and 6,075 upper extremity films; interpretations were provided by physicians (n=8,152, 47.2%), physician assistants (n=6,104, 35.4%), and nurse practitioners (n=3,004, 17.4%). The mean patient age was 9.1 years (SD 5.1 years), and 50.1% of patients were female. Before the discrepancy analyses, 78 (5%) charts were reviewed by three research team members to assess inter-rater reliability; agreement between reviewers was moderate (kappa 0.77).
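Fleiss' kappa extends Cohen's kappa to three or more raters by comparing observed pairwise agreement with the agreement expected from overall category frequencies. The sketch below is a plain-Python implementation of the standard formula, not the authors' R code. The categories in the toy example (change required, no change, not documented) are a hypothetical choice, since the paper does not say which chart-review fields were rated. Whether 0.77 is called "moderate" depends on the scale: McHugh's bands label 0.60 to 0.79 moderate, while the Landis and Koch bands label 0.61 to 0.80 substantial; the source does not name the scale used.

```python
def fleiss_kappa(counts: list[list[int]]) -> float:
    """Fleiss' kappa for subjects that are each rated by the same number of raters.

    counts[i][j] is the number of raters who placed subject i in category j.
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    n_categories = len(counts[0])

    # p_j: share of all ratings that fell into category j
    p_j = [sum(row[j] for row in counts) / (n_subjects * n_raters) for j in range(n_categories)]
    # P_i: proportion of agreeing rater pairs for subject i
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_i) / n_subjects   # observed agreement
    p_e = sum(p * p for p in p_j)   # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)


# Toy example: 3 reviewers, 4 charts, hypothetical categories
# (change required, no change, not documented)
toy = [
    [3, 0, 0],
    [0, 3, 0],
    [2, 1, 0],
    [0, 0, 3],
]
print(round(fleiss_kappa(toy), 3))  # prints 0.745
```

Only one of the four toy charts has a split vote, yet kappa drops to about 0.75 because chance agreement is subtracted out; this is why kappa is reported rather than raw percent agreement.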

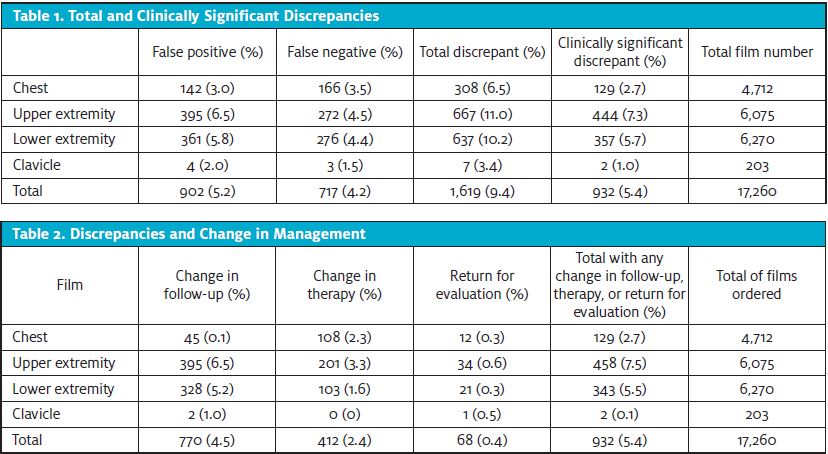

A total of 1,706 films were flagged as discrepant. After chart review, 87 of these were found not to be true discrepancies, leaving 1,619 true discrepancies, for an overall discrepancy rate of 9.4%. These comprised 902 false positives (5.2%) and 717 false negatives (4.2%) (Table 1).

Of the 1,619 discrepant films, 1,346 (83.1%) had documentation of whether a change in follow-up was required, and 1,016 (62.8%) had documentation of whether a change in therapy was required. Across all 17,260 films, the CSD rate was 5.4% (n=932); none of these discrepancies resulted in mortality (Table 2).

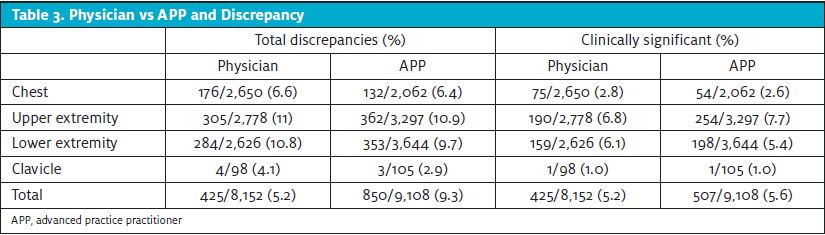
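The percentages in the Results can be recomputed directly from the reported counts, which also makes the denominators explicit: discrepancy and CSD rates are taken over all 17,260 analyzed films, while the documentation percentages are taken over the 1,619 true discrepancies. The short script below is a consistency check written for this article, not part of the original analysis.

```python
# Counts reported in the Results section
films_by_type = {"chest": 4_712, "clavicle": 203, "lower extremity": 6_270, "upper extremity": 6_075}
films_by_reader = {"physician": 8_152, "physician assistant": 6_104, "nurse practitioner": 3_004}
total_films = 17_282 - 22            # transfers to the ED were excluded
flagged, not_true = 1_706, 87
false_positives, false_negatives = 902, 717
csd_count = 932
followup_documented, therapy_documented = 1_346, 1_016


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place, as reported in the paper."""
    return round(100 * part / whole, 1)


true_discrepancies = flagged - not_true
# Internal consistency checks on the reported breakdowns
assert sum(films_by_type.values()) == total_films
assert sum(films_by_reader.values()) == total_films
assert false_positives + false_negatives == true_discrepancies

summary = {
    "overall discrepancy rate": pct(true_discrepancies, total_films),
    "false positive rate": pct(false_positives, total_films),
    "false negative rate": pct(false_negatives, total_films),
    "CSD rate": pct(csd_count, total_films),
    "follow-up documented": pct(followup_documented, true_discrepancies),
    "therapy documented": pct(therapy_documented, true_discrepancies),
}
for label, value in summary.items():
    print(f"{label}: {value}%")
```

Every recomputed value matches the text (9.4%, 5.2%, 4.2%, 5.4%, 83.1%, and 62.8%), and the film-type and reader-type breakdowns both sum to 17,260.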

The discrepancy rate among physicians was comparable with that among APPs and did not differ significantly. Similarly, the CSD rates were comparable, with no statistically significant difference (Table 3).

DISCUSSION

With the number of pediatric urgent care centers increasing and families making greater use of these facilities for convenient, nonemergency care, it is important to evaluate the ability of urgent care providers to accurately interpret radiographs when pediatric radiologists are not available.

To our knowledge, this retrospective study is the first to evaluate the discrepancy rate and CSD rate for chest, clavicle, upper extremity, and lower extremity radiographs between pediatric urgent care providers and pediatric radiologists. The overall discrepancy rate of 9.4% is comparable to those of other studies of pediatric radiographs (range 1% to 28%; median 11.9%).4-11 The CSD rate of 5.4% also fell within the range reported in previous studies (0.4% to 6.3%; median 1.3%).4-11

One difference between the current study and previous work is that the current study included only chest, upper extremity, lower extremity, and clavicle radiographs, whereas other studies also included axial skeleton and abdominal radiographs.4-8,9-11 Notably, the axial and abdominal radiograph discrepancy rates in those studies were generally lower than those of the other films.4-8,9-11

In previous research, chest radiographs were the most frequently discrepant, with rates ranging from 10% to 41.7% (median 25.3%).4,6-10 Our study, however, found that upper extremity radiographs had the highest discrepancy rate (3.9%) and the highest CSD rate (7.3%), followed by lower extremity (3.7% and 5.7%, respectively), chest (1.8% and 2.7%), and clavicle (0.04% and 1%) radiographs. These findings may reflect differences in how frequently each radiograph type was ordered; however, they also highlight the continuing challenge of interpreting pediatric orthopedic radiographs, given the presence of growth plates and subtle signs that may indicate an underlying occult fracture.
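Because every CSD is also a discrepancy, a per-type CSD rate (eg, 7.3% for upper extremity) can exceed the matching discrepancy rate (3.9%) only if the two use different denominators. The paper does not state them, so the arithmetic below is an editorial inference, not the authors' method: it tests the hypothesis that discrepancy rates are over all 17,260 films while CSD rates are over each film type's own count, using only numbers reported in the article.

```python
# Per-type rates reported in the Discussion, in percent
discrepancy_pct = {"upper": 3.9, "lower": 3.7, "chest": 1.8, "clavicle": 0.04}
csd_pct = {"upper": 7.3, "lower": 5.7, "chest": 2.7, "clavicle": 1.0}
# Film counts by type from the Results
films = {"upper": 6_075, "lower": 6_270, "chest": 4_712, "clavicle": 203}
TOTAL_FILMS = 17_260

# Hypothesis A: each discrepancy rate uses all 17,260 films as its denominator,
# so the four rates should add up to roughly the overall 9.4% (1,619 films).
sum_of_discrepancy_rates = sum(discrepancy_pct.values())
implied_if_total = sum_of_discrepancy_rates / 100 * TOTAL_FILMS

# Alternative: if each type used its own film count, the implied number of
# discrepant films would fall far short of the 1,619 reported.
implied_if_per_type = sum(discrepancy_pct[t] / 100 * films[t] for t in films)

# Hypothesis B: each CSD rate uses its own film-type count, so the implied
# counts should add up to roughly the 932 CSDs reported.
implied_csd_count = sum(csd_pct[t] / 100 * films[t] for t in films)

print(f"sum of per-type discrepancy rates: {sum_of_discrepancy_rates:.2f}%")
print(f"discrepant films implied by an all-films denominator: {implied_if_total:.0f}")
print(f"discrepant films implied by per-type denominators: {implied_if_per_type:.0f}")
print(f"CSDs implied by per-type denominators: {implied_csd_count:.0f}")
```

Under these assumptions both reported totals are recovered to within rounding of the one-decimal percentages, whereas per-type denominators for discrepancies imply only about a third of the reported 1,619. Per-type comparisons between the two sets of rates should therefore be read with this difference in mind.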

LIMITATIONS

This study was limited to a network of pediatric urgent care centers associated with a single tertiary care pediatric health system. Despite the inclusion of multiple centers, the data may not be generalizable to other pediatric urgent care practices and systems. Because the study was retrospective, it is unknown whether providers (either within the same role or across roles) discussed radiograph interpretations with one another before managing and discharging patients. In addition, although the medical record contained no documentation of mortality within 1 year of the urgent care visit, patients could have presented to an outside facility during that time frame and died. However, our facility is the only children's hospital in the region and receives all critically ill patients transported from regional emergency departments, so it is unlikely that any deaths were missed. Furthermore, although change in management was clearly documented in the majority of charts, some charts could not be included in the analysis because they lacked a clear description of how clinical management did or did not change (16.9% lacked documentation of whether follow-up changed and 37.2% of whether therapy changed). This is a common limitation of data abstraction in retrospective studies.12 Where documentation was available, the kappa score showed moderate inter-rater reliability, which supports the consistency of the chart review.

CONCLUSION

In studies that have evaluated discrepancy in the pediatric population, the low rate of clinically significant discrepancies has allowed emergency physicians to safely disposition patients without significant morbidity or mortality. In addition to finding similar discrepancy and CSD rates, this study found no statistically significant differences in these rates between physicians and APPs. These findings suggest that a pediatric urgent care center without continuous radiologist coverage can achieve relatively low discrepancy rates for pediatric patients requiring radiographs. The findings also support urgent care centers in structuring their staffing and consultative services in a model that provides high-value care to the patient population being served.

REFERENCES

- Saidinejad M, Paul A, Gausche-Hill M, et al. Consensus statement on urgent care centers and retail clinics in acute care of children. Pediatr Emerg Care. 2019;35(2):138-142.

- Sankrithi U, Schor J. Pediatric urgent care—new and evolving paradigms of acute care. Pediatr Clin North Am. 2018;65(6):1257-1268.

- Lowe R, Abbuhl S, Baumritter A, et al. Radiology services in emergency medicine residency programs: a national survey. Acad Emerg Med. 2002;9(6):587-594.

- Fleisher G, Ludwig S, McSorley M. Interpretation of pediatric x-ray films by emergency department pediatricians. Ann Emerg Med. 1983;12(3):153-158.

- Shirm SW, Graham CJ, Seibert JJ, et al. Clinical effect of a quality assurance system for radiographs in a pediatric emergency department. Pediatr Emerg Care. 1995;11(6):351-354.

- Walsh-Kelly CM, Melzer-Lange MD, Hennes HM, et al. Clinical impact of radiograph misinterpretation in a pediatric ED and effect of physician training level. Am J Emerg Med. 1995;13(6):669.

- Taves J, Skitch S, Valani R. Determining the clinical significance of errors in pediatric radiograph interpretation between emergency physicians and radiologists. CJEM. 2017;20(3):420-424.

- Soudack M, Raviv-Zilka L, Ben-Shlush A, et al. Who should be reading chest radiographs in the pediatric emergency department? Pediatr Emerg Care. 2012;28(10):1052-1054.

- Klein EJ, Koenig M, Diekema DS, Winters W. Discordant radiograph interpretation between emergency physicians and radiologists in a pediatric emergency department. Pediatr Emerg Care. 1999;15(4):245-248.

- Higginson I, Vogel S, Thompson J, Aickin R. Do radiographs requested from a paediatric emergency department in New Zealand need reporting? Emerg Med Australas. 2004;16(4):288-294.

- Simon HK, Khan NS, Nordenberg DF, Wright JA. Pediatric emergency physician interpretation of plain radiographs: is routine review by a radiologist necessary and cost-effective? Ann Emerg Med. 1996;27(3):295-298.

- Gilbert EH, Lowenstein SR, Koziol-McLain J, et al. Chart reviews in emergency medicine research: where are the methods? Ann Emerg Med. 1996;27(3):305-308.

Author affiliations: Allison Wood, DO, Children's Hospital of the King's Daughters; Eastern Virginia Medical School. Anne McEvoy, MD, Children's Hospital of the King's Daughters; Eastern Virginia Medical School. Paul Mullan, MD, MPH, Children's Hospital of the King's Daughters; Eastern Virginia Medical School. Lauren Paluch, MPA, PA-C, Children's Hospital of the King's Daughters; Eastern Virginia Medical School. Brynn Sheehan, PhD, Eastern Virginia Medical School. Jiangtao Luo, PhD, Eastern Virginia Medical School. Turaj Vazifedan, DHSc, Children's Hospital of the King's Daughters. Theresa Guins, MD, Children's Hospital of the King's Daughters. Jeffrey Bobrowitz, MD, Children's Hospital of the King's Daughters. Joel Clingenpeel, MD, Children's Hospital of the King's Daughters; Eastern Virginia Medical School.
